
MPEG Immersive Media Technology


Thomas Stockhammer (Qualcomm Incorporated)

On behalf of MPEG

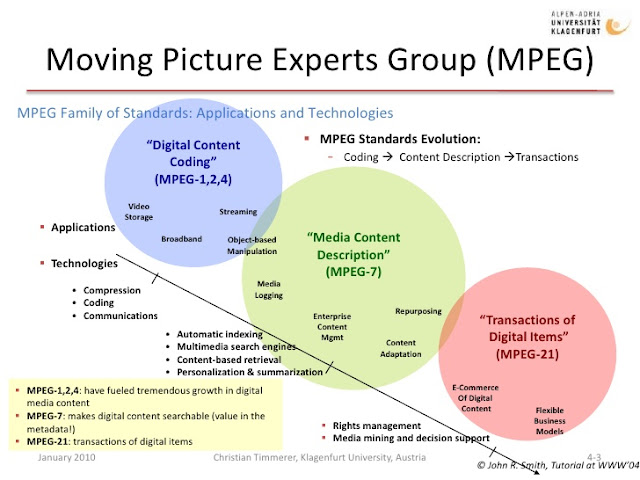

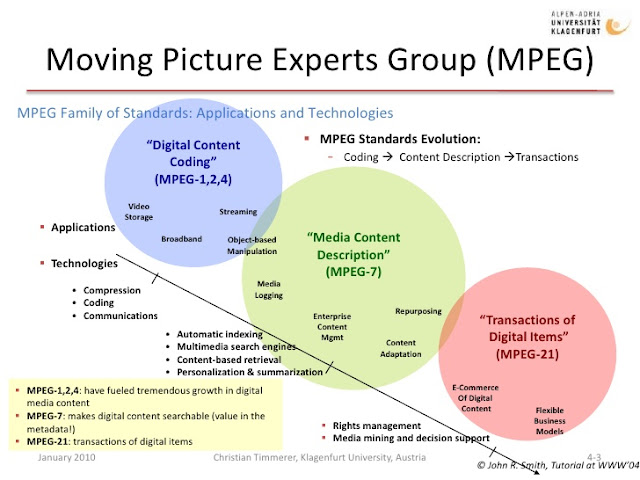

MPEG


- Some joint work with ITU-T, e.g. HEVC

- Participants are accredited by their national organization, i.e. country

- Development of specifications follows a due process structure; voting is conducted by country

- Usually meets 3-4 times per year; roughly 400 experts attend each meeting

MP20 Standardisation Roadmap

- Enabled Digital Television

- Enabled music distribution services

- Enabled HD Distribution Services

- Enabled Media Storage and Streaming

- Enables Custom fonts on Web & in Digital Publishing

- Unifies HTTP streaming protocols

- Infrastructure for New Forms of Digital Television

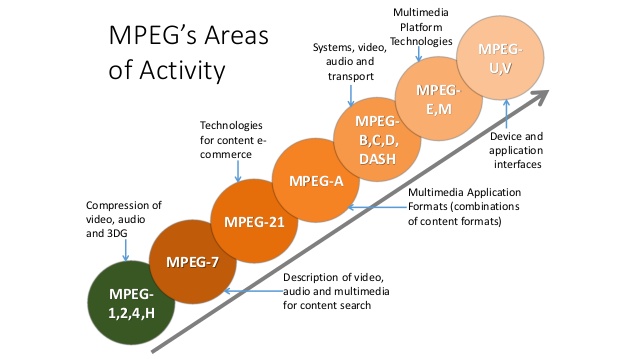

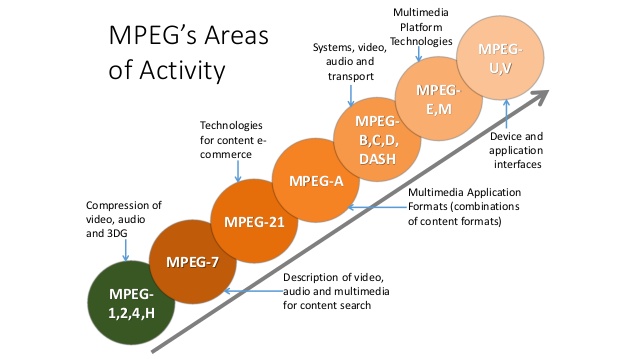

MPEG’s Areas of Activity

Questions to MPEG’s Customers

- Which needs do you see for media standardisation, between now and years out?

- What MPEG standardisation roadmap would best meet your needs?

- To accommodate your use cases, what should MPEG's priorities be for the delivery of specific standards? For example, do you urgently need something that may enable basic functionality now, or can you wait for a more optimal solution to be released later?

Program of Workshop Jan 18th, 2017

- Leonardo Chiariglione, MPEG

- Rob Koenen; José Roberto Alvarez, MPEG

- DVB VR Study Mission Report

- Video formats for VR: A new opportunity to increase the content value… But what is missing today?

- Ralf Schaefer, Technicolor

- Today's and future challenges with new forms of content like 360°, AR and VR

- The Immersive Media Experience Age

- Massimo Bertolotti, Sky Italia

- Final Remarks, Conclusion

What is virtual reality?

- Virtual Reality is a rendered environment (visual and acoustic, pre-dominantly real-world) providing an immersive experience to a user who can interact with it in a seemingly real or physical way using special electronic equipment (e.g. display, audio rendering and sensors/actuators)*

*MPEG’s definition

Elements of VR from MPEG’s point of view

360° video

- Multiple views with continuous parallax + 6 DoF

Immersive audio

- Projection of audio waveforms in a more natural way

- Listener receives an audio signal coherent with his/her position

Signaling and carriage of a/v media

Summary of MPEG VR Questionnaire Results

Introduction

- In August and September 2016, MPEG conducted an informal survey to better understand the needs for standardisation in support of VR applications and services.

- This document summarises the results of the survey.

- The summary does not list individual comments; these have been analysed by MPEG and are reflected in the Conclusions, which are also included in this summary.

- This result summary can be distributed to interested parties. It has also been sent to the respondents.

Instructions given to respondents

- ISO/IEC SC29/WG11, also known as the Moving Picture Experts Group (MPEG), is aware of the immense interest of several industry segments in content, services and products around Virtual Reality (VR). In order to address market needs, MPEG has created the following survey.

- In order to provide some context, consider the following definition for Virtual Reality: "Virtual Reality is a rendered environment (visual and acoustic, pre-dominantly real-world) providing an immersive experience to a user who can interact with it in a seemingly real or physical way using special electronic equipment (e.g. display, audio rendering and sensors/actuators)."

- MPEG believes that VR is a complex ecosystem and that already deployed technologies can begin to fulfill the very high commercial expectations on VR services and applications, but standards-based interoperability for certain aspects around VR is required. Therefore MPEG is in the process of identifying those technologies that are relevant to market success in order to define an appropriate standardization roadmap. The technologies considered include, but are not restricted to, video and audio coding and compression, metadata, storage formats and delivery mechanisms.

- This questionnaire has been developed with the goal of obtaining feedback from the industry on the technologies whose standardisation may have a positive impact on VR adoption by the market. The questionnaire will be closed on 23rd September 2016. Should this deadline not be manageable for you, please contact the organizers and we will attempt to accommodate your request for a possible extension.

- Please attempt to rate/answer all items in the questions. However, while we seek complete answers, we are also interested in receiving partially filled out questionnaires.

- Please use the comment box below a question if you wish to make comments or suggestions. You may also use the comment box at the very end of the questionnaire for general comments. The use of comment boxes is encouraged because they help us disambiguate your answers to our questions.

- Thank you for participating in our survey. Your feedback is important.

- The Chairs of the MPEG Virtual Reality Ad-hoc Group

Which business are you in?

Most relevant devices?

Typical Content Duration?

Deployment timelines?

How about standards?

What Hurdles/Obstacles?

Major Cost Factors?

Who Selects Technology?

Most Important Delivery Means?

What Motion-to-Photon Latency vs. Bitrate is required?

- A very elaborate question that not everyone completed. A rough summary is as follows:

- At least 10-20 Mbit/s required at 5 ms

- 20-40 Mbit/s required at 10 ms

- No consensus at 20 ms (100 Mbit/s?)

- Never good enough at 50 ms or higher

Video Production Formats in the near Future?

HEVC (including extensions) sufficient?

Quality Issues with 360 / 3 Degrees of Freedom?

Minimum Ingest Format Reqs?

- A very elaborate question that not everyone completed. A rough summary:

- 30 fps inadequate, although maybe at 6K and up …

- 60 fps acceptable at 4K and up

- 90 fps and higher perhaps doable at HD; good enough at 4K+

Minimum Reqs per Eye?

- A very elaborate question that not everyone completed. A rough summary:

- 30 fps never good enough, well, maybe at 8K and up?

- 60 fps usable at 4K and up

- 90 fps and higher clearly usable at 4K and up, but not at HD
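To put these resolutions and frame rates next to the 10-40 Mbit/s figures from the latency question, the following back-of-the-envelope sketch computes raw (uncompressed) video data rates and the compression ratio needed to reach a target bitrate. It assumes 8-bit 4:2:0 sampling (12 bits per pixel on average) and a 20 Mbit/s target; both are illustrative assumptions rather than survey results, and the function names are hypothetical.

```python
# Back-of-the-envelope raw video data rates. Assumption (not from the survey):
# 8-bit 4:2:0 sampling, i.e. 12 bits per pixel on average.
BITS_PER_PIXEL_420_8BIT = 12.0


def raw_bitrate_bps(width: int, height: int, fps: float,
                    bits_per_pixel: float = BITS_PER_PIXEL_420_8BIT) -> float:
    """Uncompressed video data rate in bits per second."""
    return width * height * bits_per_pixel * fps


def compression_ratio(raw_bps: float, coded_bps: float) -> float:
    """Factor by which the encoder must shrink the raw stream."""
    return raw_bps / coded_bps


if __name__ == "__main__":
    target_bps = 20e6  # hypothetical 20 Mbit/s delivery budget
    for name, (w, h) in {"HD": (1920, 1080), "4K": (3840, 2160)}.items():
        for fps in (30, 60, 90):
            raw = raw_bitrate_bps(w, h, fps)
            print(f"{name} @ {fps} fps: {raw / 1e9:.2f} Gbit/s raw, "
                  f"needs ~{compression_ratio(raw, target_bps):.0f}:1")
```

For a single 4K view at 60 fps this gives roughly 6 Gbit/s raw, i.e. about 300:1 compression to fit into 20 Mbit/s, before accounting for stereoscopic video.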

3D Audio for VR in the near future? (max 3 answers)

MPEG-H 3D Audio Sufficient for Initial Deployments?

Which Quality Issues with 3D Audio? (max 4 answers)

What Specs should MPEG Create?

Conclusions

- There is a significant interest in having standards

- An analysis shows that there is no significant difference between MPEG participants and non-participants

- Question 14 indicates that MPEG should deliver compression tools, help make for a less fragmented technology space, and support short motion-to-photon delay

- The focus is now on 360 Video with 3 Degrees of Freedom (monoscopic or stereoscopic)

- There is a clear interest in 6 Degrees of Freedom.

- Broadcast is considered an interesting business model by a significant number of respondents.

- This raises the question whether broadcasting brings specific requirements, and whether broadcast as a service also implies broadcast as a distribution model. Most respondents seem convinced that adaptive streaming is the best way to distribute VR content.

- Adaptive streaming is considered very important

- There is also an understanding that it needs to get better, i.e. more adaptive to viewing direction (in terms of motion-to-photon delay)

- Most respondents believe that HEVC is useful, but a significant number believe that extensions may be desired or required, e.g. for tiling support or the use of multiple decoders.

- No clear picture emerges on quality requirements for video, although it is clear that very high resolutions are desired. Current VR quality is not yet enough for a good experience, and MPEG should provide tools that enable higher quality.

- Respondents also indicate that MPEG-defined projection methods are desirable.

- Coding technologies will be required to support experiences with 6 degrees of freedom

- Many respondents did not have an opinion on audio, but those that did think that the required tools are available.

- There is a need to look at the interaction between projection mapping and video coding, and to find optimal solutions.

- Question 16 shows us that requirements from those who create the content are important, as content creators are seen as an important factor in determining what tools are used.

- The survey gives a fairly uniform picture when it comes to deployment timelines

- Commercial Trials: 2016 and 2017, then levelling off

- Initial Commercial Launch: 2017/2018

MPEG-I Project

Immersive Media in MPEG

Phase 1a

- Timing is what guides this phase

- To deliver a standard for 3DoF 360 VR in the given timeframe (end 2017 or maybe early 2018)

- Aim for a complete distribution system

- Based on the OMAF activity and using OMAF timelines

- A 3D Audio profile of MPEG-H geared to a 360 audiovisual experience with 3 DoF

- Basic 360 streaming, and if possible optimizations (e.g., Tiled Streaming)

- Adequate tiling support in HEVC (may already exist) and projection, monoscopic and stereoscopic

- MPEG should be careful not to call this MPEG VR, as the quality that can be delivered in the given timeframe may not be enough.

Phase 1b

- Mainly motivated by the desire of a significant part of respondents to launch commercial services in 2020

- Deploy in 2020; spec ready in 2019 (which may match 5G deployments)

- Extension of 1a; focus very likely still on VR 360 with 3 DoF (again monoscopic and stereoscopic)

- May include elements that could not be included in Phase 1a, and quality improvements. It is not a foregone conclusion that there will be a Phase 1b; if there is such a phase, what it would comprise is still to be defined

- E.g., optimization in projection mapping

- E.g., further motion-to-photon delay reductions

- Optimizations for person-to-person communications

- Phase 1b should have some quality definition and verification

Phase 2

- A specification that is ready in 2021 or maybe 2022

- This would be a “native” VR spec (“MPEG VR”)

- Goal is support for 6 DoF

- The most important element is probably a new video codec with support for 6 DoF; the Video group is to decide which tools are most suitable

- Audio support for 6 degrees of freedom

- Systems elements perhaps required too in support of 6 DoF, as well as 3D graphics.

Phase 1 Baseline Technology: Omni-directional Media Application Format and others

Basic framework of VR

- There can be different media formats (codec and metadata), and different signaling and transmission protocols

- There can be different camera settings, with different optical parameters

- What is rendered needs to be immersive to the user

- High pixel quantity and quality, broad FOV, stereoscopic display

- High resolution audio, 3D surround sound

- Intuitive interactions: minimal latency, natural UI, precise motion tracking

- There can be different stitching and projection mapping algorithms

- There can be different video codecs and different encoding schemes

Work already underway or completed

- Omni-directional Media Format

- DASH extensions for streaming VR and signaling ROI

- HEVC enhanced for flexible tiling

- Experiments for 360° stereo + 3 DoF video

- Audio completed for 3 DoF

- Experiments for many formats of projection mappings and necessary signaling

OMAF – when

- Will probably be the first industry standard on virtual reality (VR)

- MPEG started looking at VR and started the OMAF project in Oct. 2015

- Technical proposals started in Feb. 2016

- First working draft (WD) as an output of the Jun. 2016 MPEG meeting

- CD after Jan 2017 MPEG meeting, FDIS late 2017

OMAF – what

- The focus of the first version of OMAF would be 360° video and associated audio

- File format encapsulation and metadata signalling

- Extensions to ISO base media file format (ISOBMFF) needed

- DASH encapsulation and metadata signalling

- Extensions to DASH needed

- Codec and coding configurations

- Guidelines of viewport dependent VR media processing, based on either of the following

- Viewport dependent video encoding and decoding

- Viewport dependent projection mapping

OMAF architecture

- DASH media requesting and reception

- Multiple-sensors-captured video or audio

- Coded video or audio bitstream

- Projection mapping etc. metadata

- Metadata info of current FOV

- Formats to be standardized in OMAF: E/F/G, incl. signalling of M1 in F/G.

- For audio, the entire stitching process is not needed, and D is the same as B.

Sphere – equi-rectangular

Cylinder

Cube map

Icosahedron

Truncated pyramid
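The projection formats above differ in where a viewing direction on the sphere lands in the 2D picture that is actually coded. The following minimal sketch covers two of them, equirectangular and cube map. The axis convention (x forward, y left, z up), placing yaw 0 at the centre of the equirectangular frame, and the face labels are assumptions chosen for illustration; OMAF and individual cube-map layouts define their own conventions.

```python
import math


def direction(yaw_deg: float, pitch_deg: float) -> tuple[float, float, float]:
    """Unit vector for a viewing direction (assumed axes: x forward, y left, z up)."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (math.cos(pitch) * math.cos(yaw),
            math.cos(pitch) * math.sin(yaw),
            math.sin(pitch))


def erp_pixel(yaw_deg: float, pitch_deg: float,
              width: int, height: int) -> tuple[float, float]:
    """Equirectangular: longitude maps linearly to x, latitude linearly to y."""
    u = (0.5 - yaw_deg / 360.0) * width     # yaw 0 at the centre, positive yaw to the left
    v = (0.5 - pitch_deg / 180.0) * height  # pitch +90 (straight up) on the top row
    return u, v


def cube_face(yaw_deg: float, pitch_deg: float) -> str:
    """Cube map: the face is the axis with the largest-magnitude component."""
    x, y, z = direction(yaw_deg, pitch_deg)
    axis, value = max((("x", x), ("y", y), ("z", z)), key=lambda p: abs(p[1]))
    return ("+" if value >= 0 else "-") + axis


if __name__ == "__main__":
    print(erp_pixel(0, 0, 3840, 1920), cube_face(0, 0))
```

The equirectangular mapping is linear in angles, which is simple but stretches content near the poles; the cube map samples the sphere more evenly at the cost of face seams. Cylinder, icosahedron and truncated-pyramid mappings would each need their own face and projection logic.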

- For VR to be really immersive, bandwidth and processing complexity remain two of the biggest challenges

- At any moment, only a portion of the entire coded sphere video is rendered
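How small that portion is can be estimated from the viewport's field of view. The sketch below uses the standard solid-angle formula for a rectilinear (flat) viewport, Ω = 4·arcsin(sin(α/2)·sin(β/2)) for horizontal and vertical fields of view α and β, and divides by the 4π steradians of the full sphere. The function names and the 90°×90° example are assumptions for illustration, not OMAF parameters.

```python
import math


def viewport_solid_angle(h_fov_deg: float, v_fov_deg: float) -> float:
    """Solid angle (steradians) subtended by a rectilinear viewport.

    Uses the rectangular-pyramid formula 4 * asin(sin(a/2) * sin(b/2)),
    valid for full fields of view up to 180 degrees in each direction.
    """
    half_h = math.radians(h_fov_deg) / 2
    half_v = math.radians(v_fov_deg) / 2
    return 4 * math.asin(math.sin(half_h) * math.sin(half_v))


def sphere_fraction(h_fov_deg: float, v_fov_deg: float) -> float:
    """Share of the full sphere (4*pi sr) that the viewport shows."""
    return viewport_solid_angle(h_fov_deg, v_fov_deg) / (4 * math.pi)


if __name__ == "__main__":
    print(f"90x90 viewport: {sphere_fraction(90, 90):.1%} of the sphere")
```

A 90°×90° viewport covers exactly one sixth of the sphere (one cube face), so most of a full 360° stream is not being looked at at any given moment. This is a share of the sphere's surface, not of coded pixels: equirectangular pictures oversample the poles, so the share of ERP pixels in view depends on where the user looks.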

Viewport dependent video processing

- To tackle the bandwidth and processing complexity challenges

- To utilize the fact that only a part of entire encoded sphere video is rendered at any moment

- Two areas can be exploited to reduce the required bandwidth and the video decoding complexity

- (Viewport-dependent) video encoding, transmission, and decoding

- Viewport-dependent projection mapping schemes

VR/360 video encoding and decoding – conventional

- Each picture is in a format after a particular type of projection mapping, e.g., equi-rectangular or cube-map

- The video sequence is coded as a single-layer bitstream using temporal inter prediction (TIP)

- The entire bitstream is transmitted, decoded, and rendered

Simple Tiles based Partial Decoding (STPD)

- The video sequence is coded as a single-layer bitstream, with TIP and motion-constrained tiles used.

- Only a part of the bitstream (the minimum set of motion-constrained tiles covering the current viewport/FOV) is transmitted, decoded, and rendered.

- Compared to the conventional scheme

- Lower decoding complexity (or higher resolution under the same decoding complexity)

- Lower transmission bandwidth

- Same encoding and storage costs

- The latency between the user turning their head and seeing the new viewport is really problematic today: the tiles for the new viewport must first be transmitted and decoded
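A client implementing STPD has to work out which tiles cover the current viewport. The sketch below does this for an equirectangular picture split into a uniform grid of tiles. The grid size, the function name and the approximation of the viewport as a box in longitude/latitude are assumptions for illustration. The sketch only models tile selection on the receiving side, not how the encoder produces motion-constrained tiles.

```python
import math


def tiles_for_viewport(lon_deg: float, lat_deg: float,
                       h_fov_deg: float, v_fov_deg: float,
                       cols: int, rows: int) -> list[tuple[int, int]]:
    """Return sorted (col, row) indices of ERP tiles the viewport may touch.

    lon_deg: viewport centre longitude, degrees from the left edge of the frame.
    lat_deg: viewport centre latitude, -90 (bottom) to +90 (top).
    Simplification: the viewport is treated as a box in (longitude, latitude),
    although a rectilinear viewport's footprint in ERP is curved.
    """
    tile_w = 360.0 / cols
    tile_h = 180.0 / rows

    # Rows: clamp the latitude range to the sphere; row 0 is the top of the frame.
    top = min(90.0, lat_deg + v_fov_deg / 2)
    bottom = max(-90.0, lat_deg - v_fov_deg / 2)
    row_first = max(0, math.floor((90.0 - top) / tile_h))
    row_last = min(rows - 1, math.ceil((90.0 - bottom) / tile_h) - 1)

    # Columns: a viewport that reaches a pole (or spans 360 degrees) can see
    # every longitude; otherwise take the longitude range, wrapping at the seam.
    if top >= 90.0 or bottom <= -90.0 or h_fov_deg >= 360.0:
        col_set = set(range(cols))
    else:
        first = math.floor((lon_deg - h_fov_deg / 2) / tile_w)
        last = math.ceil((lon_deg + h_fov_deg / 2) / tile_w) - 1
        col_set = {c % cols for c in range(first, last + 1)}

    return sorted((c, r) for c in col_set for r in range(row_first, row_last + 1))


if __name__ == "__main__":
    tiles = tiles_for_viewport(0, 0, 90, 90, cols=8, rows=4)
    print(tiles, f"{len(tiles) / 32:.1%} of the tiles")
```

With an 8×4 grid and a 90°×90° viewport centred on the equator at the frame seam, `tiles_for_viewport(0, 0, 90, 90, 8, 4)` selects 4 of the 32 tiles, so about 12.5% of the tiles would be transmitted and decoded. Near the poles the sketch falls back to whole tile rows, so the saving shrinks there.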

Preliminary bandwidth comparison of SLPD#1 vs. Conventional

Elements of Phase 2 Studies, Exploration, etc.

Six degrees of freedom

- Part of MPEG’s vision for native VR

- May require entirely new video codec (TBD)

- Point cloud activity already underway

Point clouds

- Natural and computer generated content, 3D meshes

- Efficient compression for storage, streaming, and download

- CfP to be issued Jan 2017

Free navigation

- Capture of converging or diverging views from camera arrays

- Viewer can freely choose the desired view

Goals and devices for light fields

- Future light field head mounted displays

- Free navigation, e.g. sporting events

- Super multi-view display or light field display

Next steps for light field video

- Free navigation experiments with various camera array configurations.

- Testing with plenoptic video and highly dense camera array video test material.

- One solution may be to extend JPEG Pleno’s support of static light field images.

- Consider viewer fatigue, motion sickness, eye strain, coherent sensory fusion.
